While Tesla’s driver-assistance technology is deemed to trail the best rival systems – a 2020 Consumer Reports study scored it a full 12 points lower on a 100-point scale than Cadillac’s Super Cruise – Tesla’s Autopilot and its “Full Self-Driving” (FSD) package continue to be widely perceived as fully self-driving.

As a result, despite written warnings in the Tesla owner’s manual, drivers continue to treat Tesla’s Level 2 advanced driver-assistance system (ADAS) as if it had Level 4 self-driving capability that does not require the driver’s attention. To ensure a degree of driver engagement, Tesla, like some other automakers, measures the torque applied to the steering wheel as evidence that the driver does indeed have their hands on the wheel.

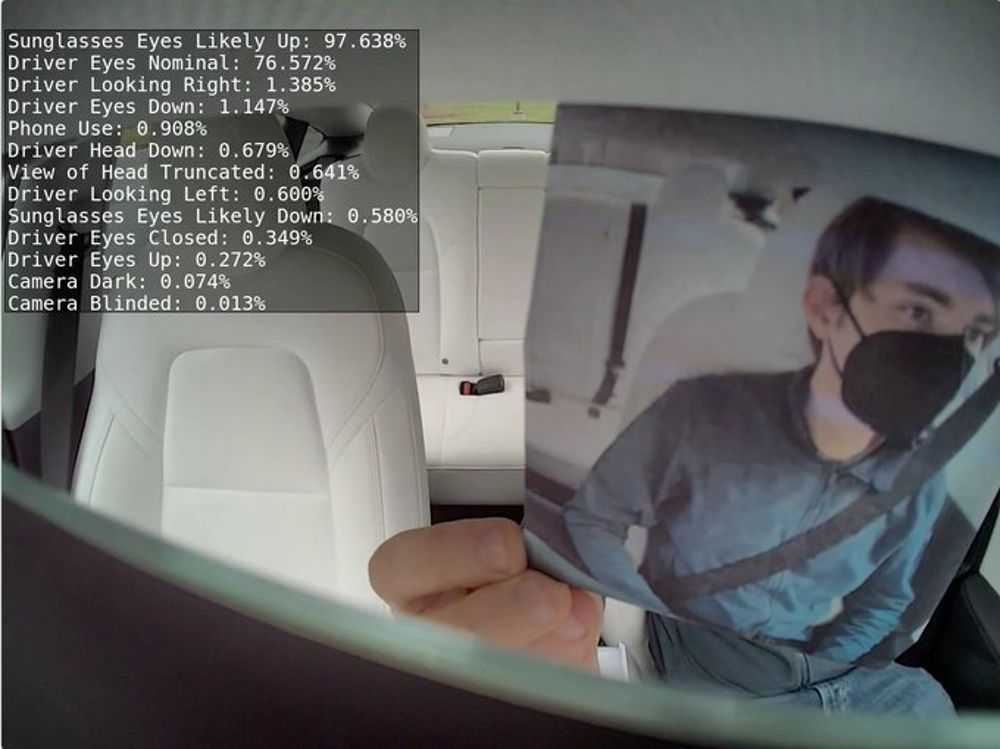

This is where Super Cruise comes into its own. Its driver attention monitoring system does not rely on steering-wheel torque to gauge attention. Instead, Cadillac uses a driver-facing camera to actively monitor the position of the driver’s eyes while the system is engaged, ensuring that the driver’s focus is where it needs to be for safe, hands-free operation.

Yet even as criticism of Tesla's weak safeguards around driver engagement mounts, the company announced in mid-September that it intends to roll out a software update to expand its Full Self-Driving capability. The update adds new driver-assistance features for navigating city streets.

Government safety officials, however, say Tesla needs to slow down and address basic safety issues before expanding the tech to more drivers.

These are the basic safety issues Tesla needs to address before expanding FSD

The new head of the National Transportation Safety Board (NTSB), Jennifer Homendy, called the Full Self-Driving name "misleading and irresponsible." In a recent interview with The Wall Street Journal, she said that consumers pay more attention to marketing than to the warnings in vehicle owner's manuals.

During the interview, conducted shortly after Tesla announced the city driving software upgrade, Homendy warned that "basic safety issues have to be addressed" before Tesla expands its technology.

This is not, however, the first time the NTSB has criticized Tesla’s driver-assistance system. In its report on a fatal 2016 crash involving a Model S with Autopilot engaged, it concluded that Tesla lacked "system safeguards to prevent misuse." In other words, the system did too little to ensure that the driver remained engaged in the driving task.

The NTSB, however, is not a regulator, though it is regarded as a leading voice in transportation safety. The agency investigates crashes and issues safety recommendations to companies and even to regulators. It has no enforcement authority, however, and cannot compel Tesla to act. That power lies with the National Highway Traffic Safety Administration (NHTSA), the federal auto safety regulator.

The NHTSA shares these concerns. In August it launched an investigation into a series of crashes in which Teslas operating with Autopilot engaged struck one or more parked emergency vehicles.

The investigation, however, is still in its early stages. The agency has requested data from Tesla and other automakers, and it will likely be some time before it makes its findings known or draws any conclusions.

Homendy’s warning must be taken seriously, but it should not be read as implying that Tesla is ignorant of the basic safety issues it faces, particularly the need to monitor driver engagement effectively.

Here's What Tesla Is Doing To Address Basic Safety Issues

From 2023, driver monitoring systems will form part of the assessment criteria of Euro NCAP, Europe’s vehicle safety rating program. As driver-assistance systems become increasingly automated, other agencies around the world are likely to adopt similar requirements.

Possibly in response to mounting criticism and the threat of a regulatory backlash, Tesla has begun rolling out a new in-cabin driver monitoring system. Although Musk has spoken out against such systems in the past, Tesla's cars already have an in-cabin camera. That made it fairly easy to add the feature through an over-the-air software update (version 2021.4.15.11) that allows the camera to "watch" the driver.

The cabin camera above the rearview mirror can now detect driver inattentiveness and alert the driver while Autopilot is engaged. Camera data does not leave the car, however: the system cannot save or transmit information unless data sharing is enabled.

The update coincides with the company’s release of Tesla Vision, a system that relies solely on camera-based computer vision, without radar.

The automaker has also released another safety-motivated update, a few weeks after the NHTSA opened its investigation into Tesla crashes with emergency vehicles. Tesla says Autopilot can now detect emergency vehicle lights and slow the car down, but only at night.

If an updated Tesla detects emergency vehicle warning lights when using Autosteer at night on a high-speed road, the driving speed is automatically reduced and the touchscreen displays a message informing the driver of the slowdown. A recently updated version of the owner's manual explains: "You will also hear a chime and see a reminder to keep your hands on the steering wheel. When the light detections pass by or cease to appear, Autopilot resumes your cruising speed. Alternatively, you may tap the accelerator to resume your cruising speed."

Musk also said that the rollout of FSD Beta v10.0.1 would begin September 24. With that release, the company will use personal driving data to decide which owners who have paid for its controversial “Full Self-Driving” software can access the latest beta, which promises more automated driving functions.

Safety is paramount, but Musk appears acutely aware that any delay in deploying FSD could set back the industry’s efforts to reduce preventable road deaths caused by human error. Even a less-than-perfect self-driving system could, on average, save lives.