Last Saturday, April 17, in Spring, Texas, a Tesla car drove off the road, crashed into a tree, and caught fire. Two passengers died. Tesla CEO Elon Musk later claimed in a tweet that the data logs recovered from the crashed Model S show that Autopilot was not turned on, which raises the question of how the car could have been moving at all if that was the case.

Presumably the car could not operate at all without a driver if the Autopilot system was off, and it should not be able to even with the system on. While it remains unclear what happened in Spring, Texas, experts from the American publication Consumer Reports have determined that it is easy to trick Tesla's systems into driving on Autopilot with an empty driver's seat.

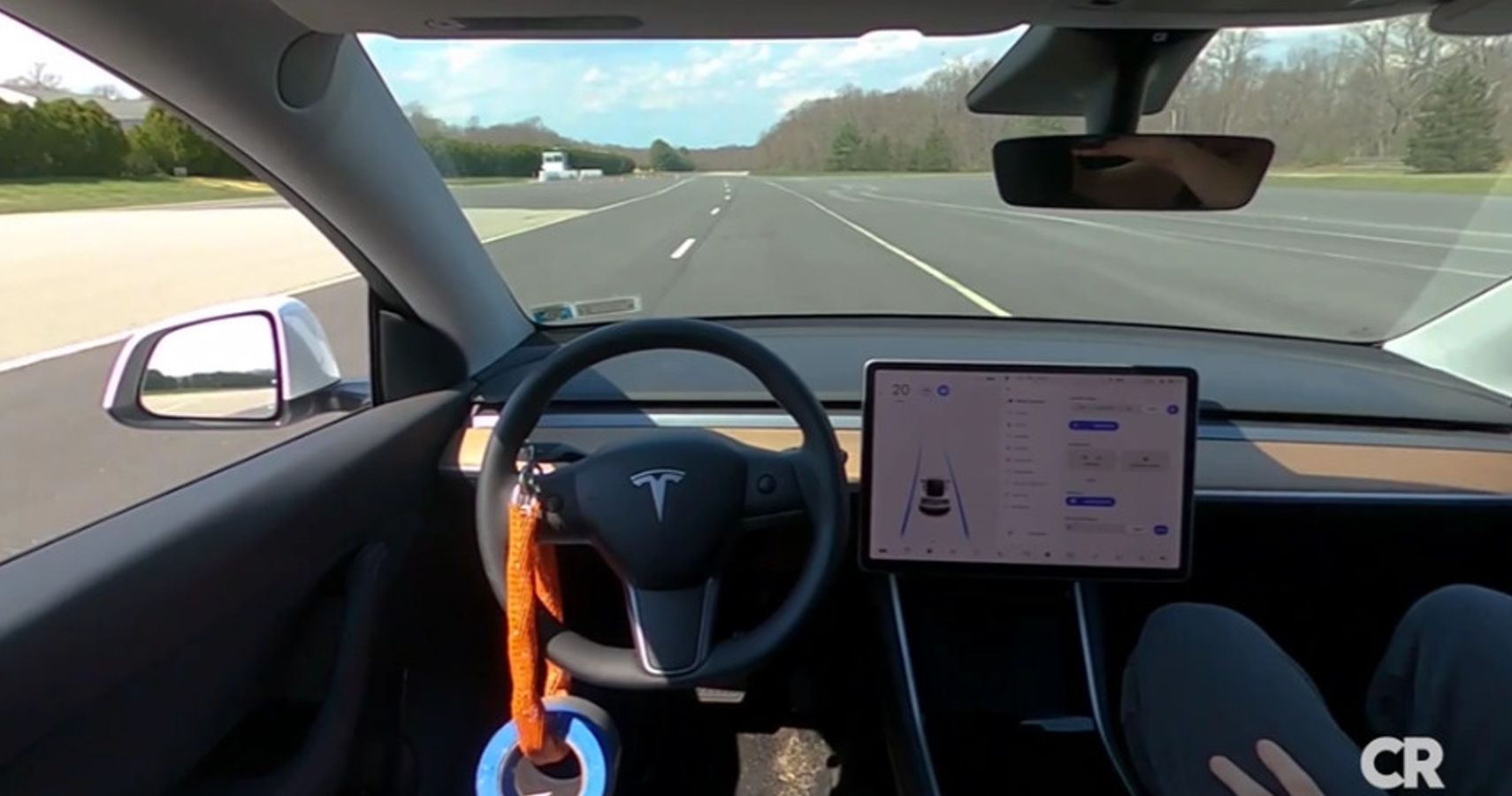

Tesla electric cars are equipped with a system called "Autopilot", but the automaker insists that it is only an auxiliary aid. When using it, the driver must therefore keep their hands on the steering wheel and stay in control of the car. As with similar systems from other brands, the car repeatedly reminds the driver of this if it detects no hands on the wheel. Consumer Reports experts decided to check how this safeguard works and whether it can be tricked. They were surprised at how easily they managed to do it.

How Did Experts Trick The Tesla Autopilot System?

The journalists carried out the tests on a closed test track. They took their 2020 Tesla Model Y, engaged Autopilot, and then simply hung a weighted chain on the steering wheel to mimic the weight of a human hand. The car then drove along the road on its own, even though no one was in the driver's seat, no one was holding the steering wheel, and no one was watching the road.

“In our evaluation, the system not only failed to make sure the driver was paying attention, but it also couldn’t tell if there was a driver there at all,” said Jake Fisher, senior director of vehicle testing at Consumer Reports, who conducted the experiment. He added that it was a little scary to realize how easy it was to bypass the safety measures.

Tesla Does Not Pay Enough Attention To Autopilot Safety Measures

As the publication notes, Tesla is clearly lagging behind other automakers in the safeguards built around the automated driving systems in its cars. For example, Ford, GM, BMW, and Subaru use video cameras that track the driver's eye movements to make sure the driver is looking at the road. As the incidents keep piling up, Tesla should probably consider doing the same.

Source: Consumer Reports